WASHINGTON (May 31, 2024) – OpenAI said this week it caught accounts linked to well-known propaganda operations in Russia, China, Iran and Israel using its technology to try to influence political discourse around the world. According to The Washington Post, the groups used OpenAI’s tech to write posts, translate them into various languages and build software that helped them automatically post to social media.

Faculty experts at the George Washington University are available to offer insight, context and analysis on a number of topics related to AI, elections and politics, and misinformation, including efforts to regulate AI, the importance of building trustworthy AI, and the impact misinformation can have on elections and politics, in the U.S. and globally. If you would like to speak with an expert, please contact GW Media Relations Specialists Shannon Mitchell at shannon[dot]mitchell[at]gwu[dot]edu and Cate Douglass at cdouglass[at]gwu[dot]edu.

Misinformation & Trustworthy AI

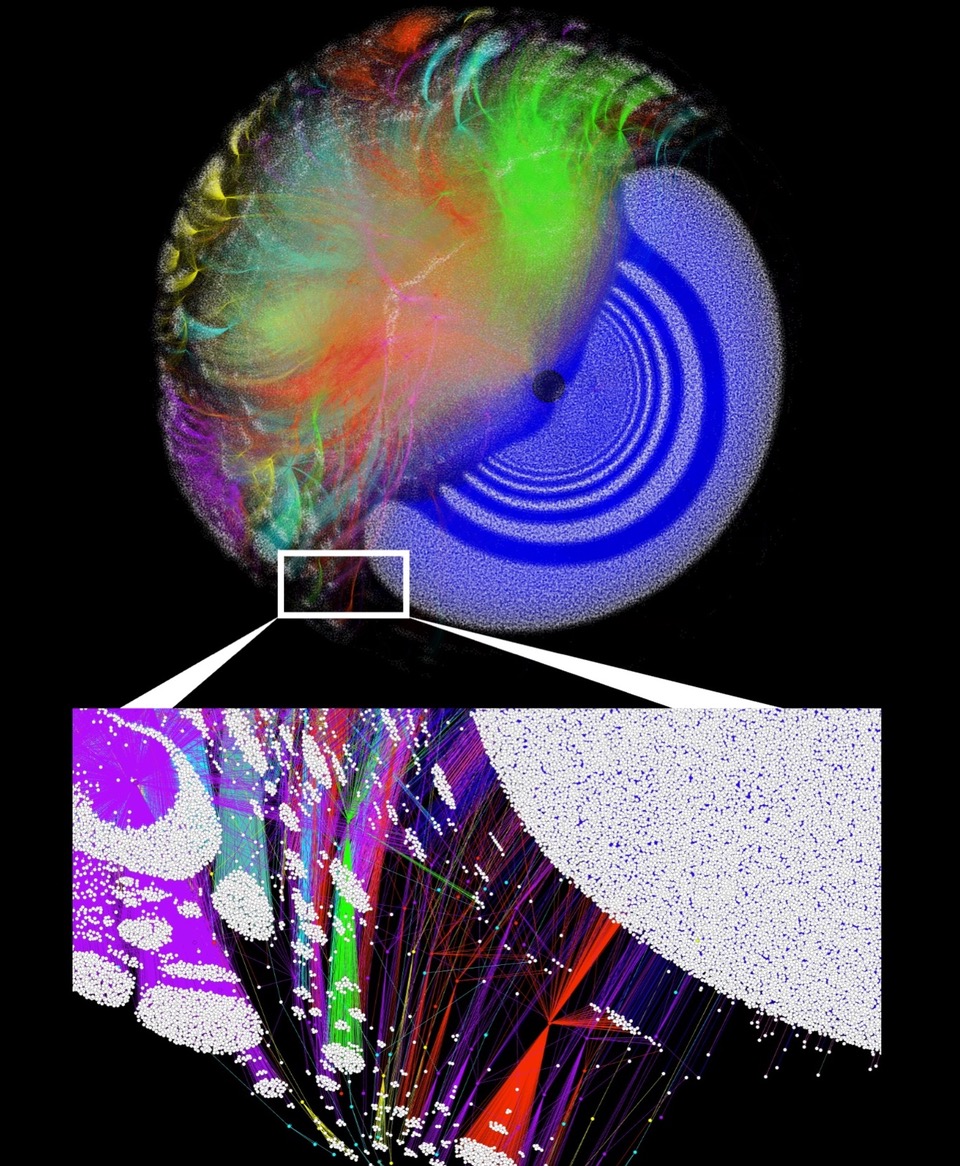

Neil Johnson, professor of physics, leads a new initiative in Complexity and Data Science which combines cross-disciplinary fundamental research with data science to attack complex real-world problems. He is an expert on how misinformation and hate speech spread online and on effective mitigation strategies. Johnson published new research this spring on bad-actor AI online activity in 2024. The study predicts that daily bad-actor AI activity will escalate by mid-2024, increasing the threat that it could affect election results. Johnson says, “We had predicted summer as the time for AI to mature to a level to be misused by major bad actors and it certainly looks like it is starting at a big scale.”

David Broniatowski, associate professor of engineering management and systems engineering, is GW’s lead principal investigator of a relatively new NSF-funded institute called TRAILS that explores trustworthy AI. In this role, Broniatowski is leading one of the institute’s research goals in evaluating how people make sense of the AI systems that are developed, and the degree to which their levels of reliability, fairness, transparency and accountability will lead to appropriate levels of trust. He also conducts research in decision-making under risk, group decision-making, system architecture, and behavioral epidemiology. Broniatowski can discuss a number of topics related to AI’s role and use in spreading misinformation as well as efforts to combat misinformation online, including the challenges of tackling misinformation and how messages spread.

Law

Alicia Solow-Niederman is an Associate Professor of Law at the George Washington University Law School. Solow-Niederman is an expert in the intersection of law and technology. Her research focuses on how to regulate emerging technologies such as AI, with an emphasis on algorithmic accountability, data governance and information privacy. Solow-Niederman is a member of the EPIC Advisory Board and has written and taught in privacy law, government use of AI and related areas.

Aram Gavoor is the Associate Dean for Academic Affairs, a Professorial Lecturer in Law, and a Professor (by courtesy) at the Trachtenberg School of Public Policy & Public Administration. Gavoor is an expert in American administrative law, national security and the federal courts. He can speak to the growing concerns about unregulated AI.

Spencer Overton holds the Patricia Roberts Harris Research Professorship and is a Professor of Law. Overton also directs the GW Equity Institute’s Multiracial Democracy Project. In addition to his expertise in voting rights, Overton’s research focuses on AI deep fakes in elections, and he has testified before Congress on the matter.

-GW-